AI’s creepy control must be open to inspection!

BOSTON, Massachusetts (PNN) - January 1, 2017 - The past year marked artificial intelligence’s 60th anniversary, and, boy, was it a lively birthday. Pop open a computer science journal on your laptop at any point in 2016 and you’d have been assured that not only was progress happening, but it was happening much, much faster than predicted. Today, AI and algorithms dominate our lives: they shape the way financial markets execute trades, the discovery of new pharmaceutical drugs, and the way we find and consume our news.

But like any invisible authority, such systems should be open to scrutiny. Yet too often they are not, and we are not even fully aware of the roles they play. For years now, companies such as Amazon, Google and Facebook have personalized the information we are fed, combing through our “metadata” to choose the items they think we are most likely to be interested in. This is in stark contrast to the early days of online anonymity, when a popular New Yorker cartoon depicted a computer-using canine with the caption: “On the Internet, nobody knows you’re a dog.” In 2017, not only do online companies know that we’re dogs, they also know our breed and whether we prefer Bakers or Pedigree.

The use of algorithms to control how we are treated extends well beyond Google’s personalized search or Facebook’s customized news feed. Tech giants such as Cisco have explored how the Internet could be divided into groups of customers who would receive preferential download speeds based on their perceived value. Other companies promise to use breakthroughs in speech-recognition technology in call centers, routing customers to agents whose personality type resembles their own in order to improve call resolution rates.

It would be a mistake to decry all such personalization as negative. The futurist and writer Arthur C. Clarke once noted that any sufficiently advanced technology is indistinguishable from magic. Most of us have felt the awe of watching a really good magic trick when our smartphone pops up an unrequested but relevant piece of information at just the right moment, such as an iPhone remembering where we parked the car.

But the feeling is often tempered by a moment of doubt. Is it a bit creepy that Google knows your favorite football club? Are we being shown only the news that matches Facebook’s vision of who we are? Most of the time we don’t know because the algorithms’ internal workings remain inaccessible to us.

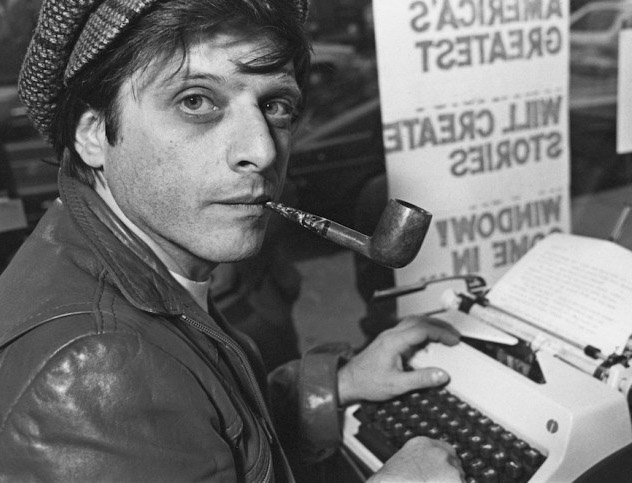

The academic Sherry Turkle made an interesting observation about PCs in the 1980s, when families started buying home computers and endless lines of green text gave way to sumptuous graphical user interfaces. At this point, she suggested, computers changed from hobbyist machines you could open up and physically tinker with into machines you could learn to operate immediately, even if you didn’t know exactly how they worked. Computers, Turkle playfully noted, begged to be taken not at face value but at interface value.

This philosophy has only become more entrenched over the years, particularly in artificial intelligence. Some of today’s most impressive advances in machine learning rely on “deep learning neural networks”: systems loosely modeled on the way the human brain works which, ironically, are almost entirely inscrutable to humans. Such networks are trained by feeding them example inputs alongside the desired outputs, while the training process adjusts the internal connections in between until the middle part “just works”. Their creators have, in effect, sacrificed understanding in favor of results.

Because such tools will increasingly be used for high-stakes tasks such as AI-driven warfare, prioritizing hospital patients, and determining which areas of a city should be most heavily policed, being able to question them is essential. We may view computers as coolly objective (hence the science-fiction trope of the machine that gains emotions and then goes wrong), but human bias and error can creep into algorithms, too. It’s when we believe that such systems are beyond question (and lack the means to question them if we change our minds) that things get problematic.

So what’s the answer? This is a more complex challenge than the over-the-air quick fixes Silicon Valley loves to deploy. On Twitter, you can cut down on speech you don’t like by banning the people who post it. No such easy fix exists when the positives and negatives of a technology are so deeply entwined. In this sense, technology is a bit like the political system: there are so many decisions to make that we hand the overwhelming majority of them to someone we trust.

There is some evidence that things are changing. AI accountability is shaping up to be one of this year’s hot topics, both ethically and technologically. Recently, researchers at the Massachusetts Institute of Technology’s Computer Science and Artificial Intelligence Laboratory published preliminary work on deep learning neural networks that not only offer predictions and classifications but can also rationalize their decisions.

Artificial intelligence achieved a lot in 2016. One goal for 2017 should be to make its workings more transparent. With so much riding on it, this could be the year when, to coin a phrase, we begin to take back control.