Artificial intelligence pioneer warns smart computers could doom mankind!

BERKELEY, California (PNN) - July 17, 2015 - Artificial intelligence has the potential to be as dangerous to mankind as nuclear weapons, a leading pioneer of the technology has claimed.

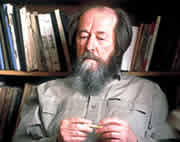

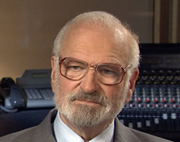

Professor Stuart Russell, a computer scientist who has led research on artificial intelligence, fears humanity might be “driving off a cliff” with the rapid development of AI.

He fears the technology could too easily be exploited by the military, with weapons placed under the control of AI systems.

He points to the rapid development of AI capabilities by companies such as Boston Dynamics, recently acquired by Google, which develops autonomous robots for use by the military.

Professor Russell, who is a researcher at the University of California, Berkeley, and the Centre for the Study of Existential Risk at Cambridge University, compared the development of AI to the work that was done to develop nuclear weapons.

His views echo those of people like Elon Musk who have warned recently about the dangers of artificial intelligence.

Professor Stephen Hawking also joined a group of leading experts to sign an open letter warning of the need for safeguards to ensure AI has a positive impact on mankind.

In an interview with the journal Science for a special edition on Artificial Intelligence, Professor Russell said, “From the beginning, the primary interest in nuclear technology was the inexhaustible supply of energy. The possibility of weapons was also obvious. I think there is a reasonable analogy between unlimited amounts of energy and unlimited amounts of intelligence. Both seem wonderful until one thinks of the possible risks. In neither case will anyone regulate the mathematics. The regulation of nuclear weapons deals with objects and materials, whereas with AI it will be a bewildering variety of software that we cannot yet describe. I'm not aware of any large movement calling for regulation either inside or outside AI, because we don't know how to write such regulations.”

This week Science published a series of papers highlighting the progress that has been made in artificial intelligence recently.

In one, researchers describe the pursuit of a computer that is able to make rational economic decisions independently of humans, while another outlines how machines are learning from “big data”.

However, Professor Russell cautions that this unchecked development of technology can be dangerous if the consequences are not fully explored and regulations are not put in place.

In April, Professor Russell raised concerns at a United Nations meeting in Geneva over the dangers of putting military drones and weapons under the control of AI systems.

He joins a growing number of experts who have warned that scenarios like those seen in films such as Terminator, AI, and 2001: A Space Odyssey are not beyond the realms of possibility.

“The basic scenario is explicit or implicit value misalignment - AI systems [that are] given objectives that don't take into account all the elements that humans care about,” said Russell.

“The routes could be varied and complex - corporations seeking a supertechnological advantage, countries trying to build [AI systems] before their enemies, or a slow-boiled frog kind of evolution leading to dependency and enfeeblement not unlike EM Forster's The Machine Stops,” said Russell.

EM Forster's short story tells of a post-apocalyptic world where humanity lives underground and relies for its survival on a giant machine, which then begins to malfunction.

Professor Russell said computer scientists needed to modify the goals of their research to ensure human values and objectives remain central to the development of AI technology.

In an editorial in Science, editors Jelena Stajic, Richard Stone, Gilbert Chin and Brad Wible said, “Triumphs in the field of AI are bringing to the fore questions that, until recently, seemed better left to science fiction than to science. How will we ensure that the rise of the machines is entirely under human control? What will the world be like if truly intelligent computers come to coexist with humankind?”